About me

I am a Postdoc in Human-Computer Interaction (HCI) at Aarhus University, focusing on combined eye and hand tracking in Augmented Reality (AR). I have previously worked on multimodal 3D selection that combines eye-tracking and hand-tracking. I also collaborate with other HCI researchers, including on adaptive user interfaces.

Research interests: Eye-tracking, Augmented Reality, Virtual Reality, Interaction Techniques, Study Design.

Publications

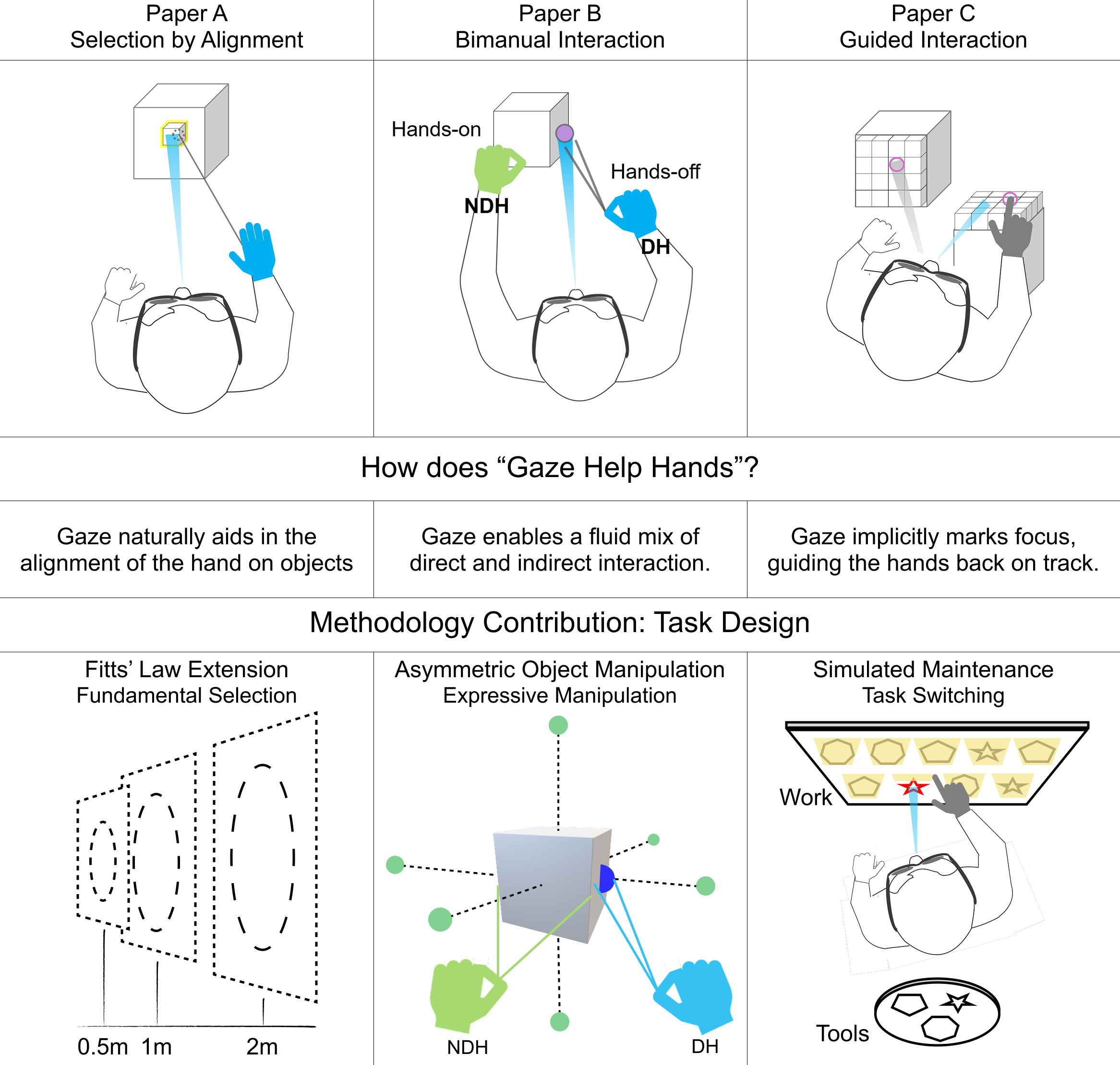

Eyes Helping Hands: Gaze-Assisted Hand Interaction in Extended Reality

Mathias N Lystbæk

When we interact with our environment, we use not only our hands but also our eyes. Our eyes play a major role in everyday interaction, locating areas of interest and guiding our hands before movement begins. Extended Reality (XR) promises a seamless blend of physical and digital worlds, visualising virtual components in our real or virtual environment. In this thesis, I investigate "Eyes Helping Hands" through three explorations of gaze assistance in XR interaction. My contributions demonstrate that by designing interactions leveraging natural eye-hand coordination, we can create XR interactions that are familiar to users while being performant and ergonomic. This thesis opens new directions for attention-aware XR systems and establishes eye-hand coordination as a core design consideration for future XR applications.

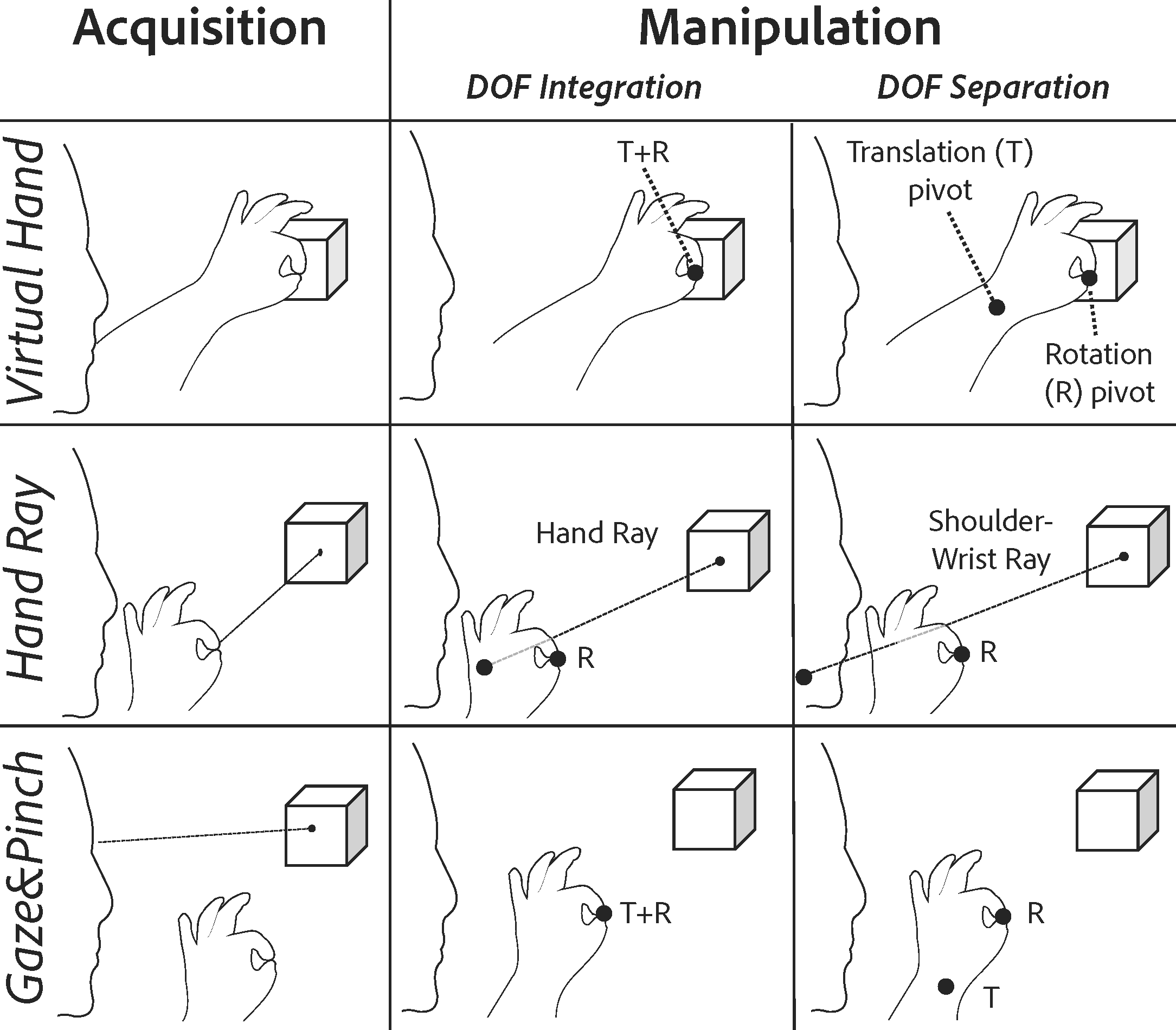

DOF-Separation for 3D Manipulation in XR: Understanding Finger-Wrist Separation to Simultaneously Translate and Rotate Objects

Thorbjørn Mikkelsen, Qiushi Zhou, Mathias N Lystbæk, Yang Liu, Hans Gellersen, and Ken Pfeuffer

Hand-tracking based 3D object manipulation in Extended Reality (XR) typically employs a pinch gesture for acquisition and manipulation through a direct mapping from 6-degrees-of-freedom (DOF) hand movement to that of the object. In this work, we investigate the effect of separating this mapping into concurrent 3DOF controls (DOF-Separation) of translation and rotation of the virtual object using the position and orientation of the hand independently. We aim to understand how DOF-Separation could ease manipulation for different techniques with varying requirements for hand position and orientation during acquisition, including Virtual Hand, Hand Ray, and Gaze&Pinch. Through a user study that features a docking task in VR, we found that DOF-Separation significantly improves the manipulation performance of Hand Ray, while improving that of Virtual Hand only in difficult tasks of combined translation and rotation. We suggest that future XR systems adopt DOF-Separation for input in manipulation-heavy applications, such as 3D design.
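
To make the difference between the two mappings concrete, here is a minimal 2D sketch. It is an illustrative simplification, not the implementation from the paper: the function names, the `(x, y, angle)` hand pose, and the reduction to two dimensions are all assumptions made for this example.

```python
import math

def coupled_update(obj_pos, obj_angle, hand_prev, hand_now):
    """Direct mapping (assumed): the object moves as if rigidly attached to the hand,
    so rotating the hand also swings the object around the hand."""
    (px, py, pa), (nx, ny, na) = hand_prev, hand_now
    d = na - pa
    # Offset from hand to object, rotated by the hand's change in orientation.
    ox, oy = obj_pos[0] - px, obj_pos[1] - py
    rx = ox * math.cos(d) - oy * math.sin(d)
    ry = ox * math.sin(d) + oy * math.cos(d)
    return (nx + rx, ny + ry), obj_angle + d

def separated_update(obj_pos, obj_angle, hand_prev, hand_now):
    """Separated mapping (assumed): hand position drives translation only,
    hand orientation drives rotation about the object's own centre only."""
    (px, py, pa), (nx, ny, na) = hand_prev, hand_now
    return (obj_pos[0] + nx - px, obj_pos[1] + ny - py), obj_angle + (na - pa)
```

With the hand turning in place by a quarter turn and the object one unit away, the coupled mapping moves the object along an arc, while the separated mapping rotates it on the spot.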

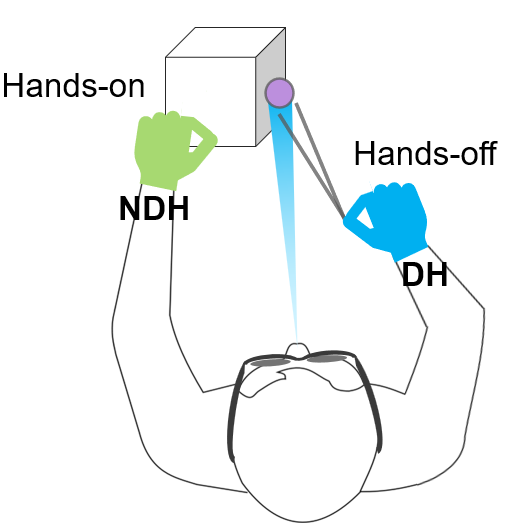

Hands-on, Hands-off: Gaze-Assisted Bimanual 3D Interaction

Mathias Lystbæk, Thorbjørn Mikkelsen, Roland Krisztandl, Eric J. Gonzalez, Mar Gonzalez-Franco, Hans Gellersen, and Ken Pfeuffer

Extended Reality (XR) systems with hand-tracking support direct manipulation of objects with both hands. A common interaction in this context is for the non-dominant hand (NDH) to orient an object for input by the dominant hand (DH). We explore bimanual interaction with gaze through three new modes of interaction where the input of the NDH, DH, or both hands is indirect based on Gaze+Pinch. These modes enable a new dynamic interplay between our hands, allowing flexible alternation between and pairing of complementary operations. Through applications, we demonstrate several use cases in the context of 3D modelling, where users exploit occlusion-free, low-effort, and fluid two-handed manipulation. To gain a deeper understanding of each mode, we present a user study on an asymmetric rotate-translate task. Most participants preferred indirect input with both hands for lower physical effort, without a penalty on user performance. Otherwise, they preferred modes where the NDH oriented the object directly, supporting preshaping of the hand, which is more challenging with indirect gestures. The insights gained are of relevance for the design of XR interfaces that aim to leverage eye and hand input in tandem.

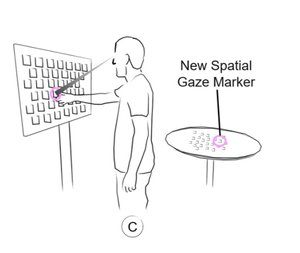

Spatial Gaze Markers: Supporting Effective Task Switching in Augmented Reality

Mathias Lystbæk, Ken Pfeuffer, Tobias Langlotz, Jens Emil Grønbæk, and Hans Gellersen

Task switching can occur frequently in daily routines with physical activity. In this paper, we introduce Spatial Gaze Markers, an augmented reality tool to support users in immediately returning to the last point of interest after an attention shift. The tool is task-agnostic, using only eye-tracking information to infer distinct points of visual attention and to mark the corresponding area in the physical environment. We present a user study that evaluates the effectiveness of Spatial Gaze Markers in simulated physical repair and inspection tasks against a no-marker baseline. The results give insights into how Spatial Gaze Markers affect user performance, task load, and experience of users with varying levels of task type and distractions. Our work is relevant to assist physical workers with simple AR techniques and render task switching faster with less effort.

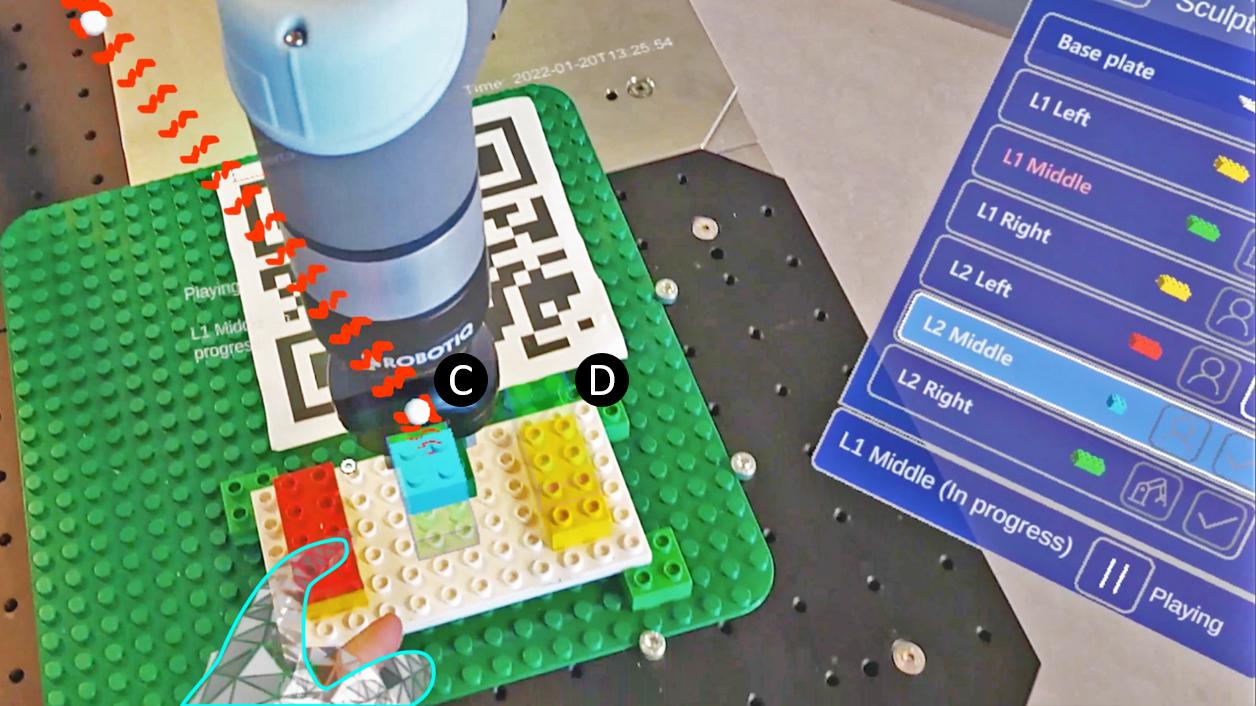

AR-supported Human-Robot Collaboration: Facilitating Workspace Awareness and Parallelized Assembly Tasks

Rasmus S. Lunding, Mathias N. Lystbæk, Tiare Feuchtner, and Kaj Grønbæk

While technologies for human-robot collaboration are rapidly advancing, plenty of aspects still need further investigation, such as ensuring workspace awareness, enabling the operator to reschedule tasks on the fly, and how users prefer to coordinate and collaborate with robots. To address these, we propose an Augmented Reality interface that supports human-robot collaboration in an assembly task by (1) enabling the inspection of planned and ongoing robot processes through dynamic task lists and a path visualization, (2) allowing the operator to also delegate tasks to the robot, and (3) presenting step-by-step assembly instructions. We evaluate our AR interface in comparison to a state-of-the-art tablet interface in a user study, where participants collaborated with a robot arm in a shared workspace to complete an assembly task. Our findings confirm the feasibility and potential of AR-assisted human-robot collaboration, while pointing to some central challenges that require further work.

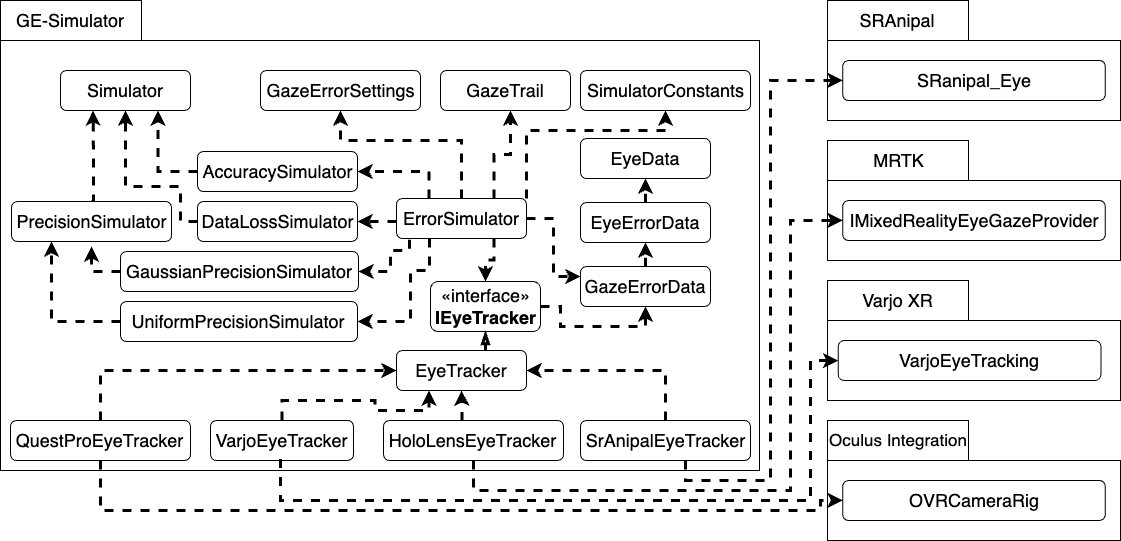

GE-Simulator: An Open-Source Tool for Simulating Real-Time Errors for HMD-based Eye Trackers

Ludwig Sidenmark, Mathias N. Lystbæk, and Hans Gellersen

As eye tracking in augmented and virtual reality (AR/VR) becomes established, it will be used by broader demographics, increasing the likelihood of tracking errors. Therefore, it is important when designing eye tracking applications or interaction techniques to test them at different signal quality levels to ensure they function for as many people as possible. We present GE-Simulator, a novel open-source Unity toolkit that simulates accuracy, precision, and data loss errors during real-time usage by injecting errors into the gaze vector from the head-mounted AR/VR eye tracker. The tool is customisable without having to change the source code and supports changes in eye tracking errors during and in-between usage. Our toolkit allows designers to prototype new applications at different levels of eye tracking quality in the early phases of design and can be used to evaluate techniques with users at varying signal quality levels.
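
The three error types can be illustrated with a short sketch. This is not GE-Simulator's code or API (the toolkit is built for Unity); the function names, parameters, and the choice of a constant horizontal offset for accuracy and Gaussian jitter for precision are assumptions made for illustration.

```python
import math
import random

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def rotate_about_axis(v, axis, angle_deg):
    """Rotate v about a unit axis by angle_deg degrees (Rodrigues' formula)."""
    a = math.radians(angle_deg)
    k = normalize(axis)
    cos_a, sin_a = math.cos(a), math.sin(a)
    k_dot_v = sum(ki * vi for ki, vi in zip(k, v))
    k_cross_v = (
        k[1] * v[2] - k[2] * v[1],
        k[2] * v[0] - k[0] * v[2],
        k[0] * v[1] - k[1] * v[0],
    )
    return tuple(
        vi * cos_a + ci * sin_a + ki * k_dot_v * (1 - cos_a)
        for vi, ci, ki in zip(v, k_cross_v, k)
    )

def degrade_gaze(gaze, accuracy_deg, precision_sd_deg, loss_prob, rng):
    """Return a degraded gaze direction, or None to model a lost sample.

    All parameters are hypothetical names for this sketch:
    accuracy_deg     constant angular offset (systematic error)
    precision_sd_deg standard deviation of per-sample jitter (random error)
    loss_prob        probability that a sample is dropped
    """
    if rng.random() < loss_prob:
        return None
    out = normalize(gaze)
    # Accuracy: fixed horizontal offset, applied as a rotation about the up axis.
    out = rotate_about_axis(out, (0, 1, 0), accuracy_deg)
    # Precision: independent Gaussian jitter horizontally and vertically.
    out = rotate_about_axis(out, (0, 1, 0), rng.gauss(0, precision_sd_deg))
    out = rotate_about_axis(out, (1, 0, 0), rng.gauss(0, precision_sd_deg))
    return normalize(out)
```

Calling `degrade_gaze((0, 0, 1), 2.0, 0.5, 0.05, random.Random(1))` once per frame would yield a gaze direction that is offset by about two degrees, jitters slightly, and is occasionally missing.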

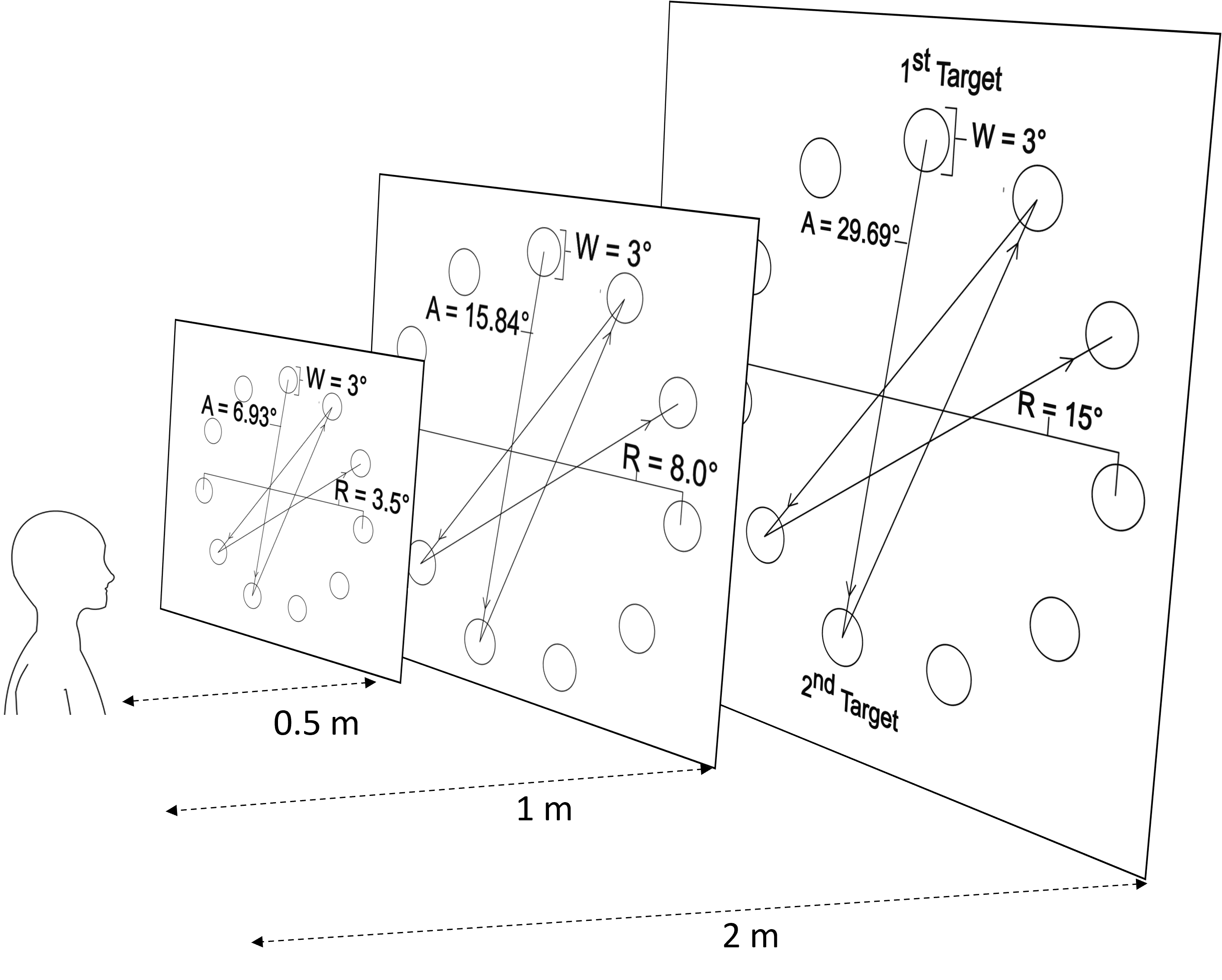

A Fitts’ Law Study of Gaze-Hand Alignment for Selection in 3D User Interfaces

Uta Wagner, Mathias N. Lystbæk, Pavel Manakhov, Jens Emil Sloth Grønbæk, Ken Pfeuffer, and Hans Gellersen

Gaze-Hand Alignment has recently been proposed for multimodal selection in 3D. The technique takes advantage of gaze for target pre-selection, as it naturally precedes manual input. Selection is then completed when manual input aligns with gaze on the target, without the need for an additional click method. In this work, we evaluate two alignment techniques, Gaze&Finger and Gaze&Handray, combining gaze with image plane pointing versus raycasting, in comparison with hands-only baselines and Gaze&Pinch as an established multimodal technique. We used a Fitts’ Law study design with targets presented at different depths in the visual scene to assess the effect of parallax on performance. The alignment techniques outperformed their respective hands-only baselines. Gaze&Finger is efficient when targets are close to the image plane but less performant with increasing target depth due to parallax.
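
For readers unfamiliar with Fitts' Law, the sketch below shows the quantities such a study typically reports. It uses the common Shannon formulation of the index of difficulty; the abstract does not state which formulation or units the study used, so the formula choice, the angular-size helper, and all function names are assumptions.

```python
import math

def index_of_difficulty(distance, width):
    """Shannon formulation: ID = log2(D / W + 1), in bits."""
    return math.log2(distance / width + 1)

def throughput(distance, width, movement_time_s):
    """Bits per second for one condition: ID divided by movement time."""
    return index_of_difficulty(distance, width) / movement_time_s

def angular_size_deg(size, depth):
    """Visual angle of a target of a given size at a given depth.

    Expressing distance and width as visual angles is one way to keep
    conditions comparable when targets sit at different depths.
    """
    return math.degrees(2 * math.atan(size / (2 * depth)))
```

For example, a target 7 widths away has an index of difficulty of 3 bits, and selecting it in 1.5 seconds corresponds to a throughput of 2 bits per second.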

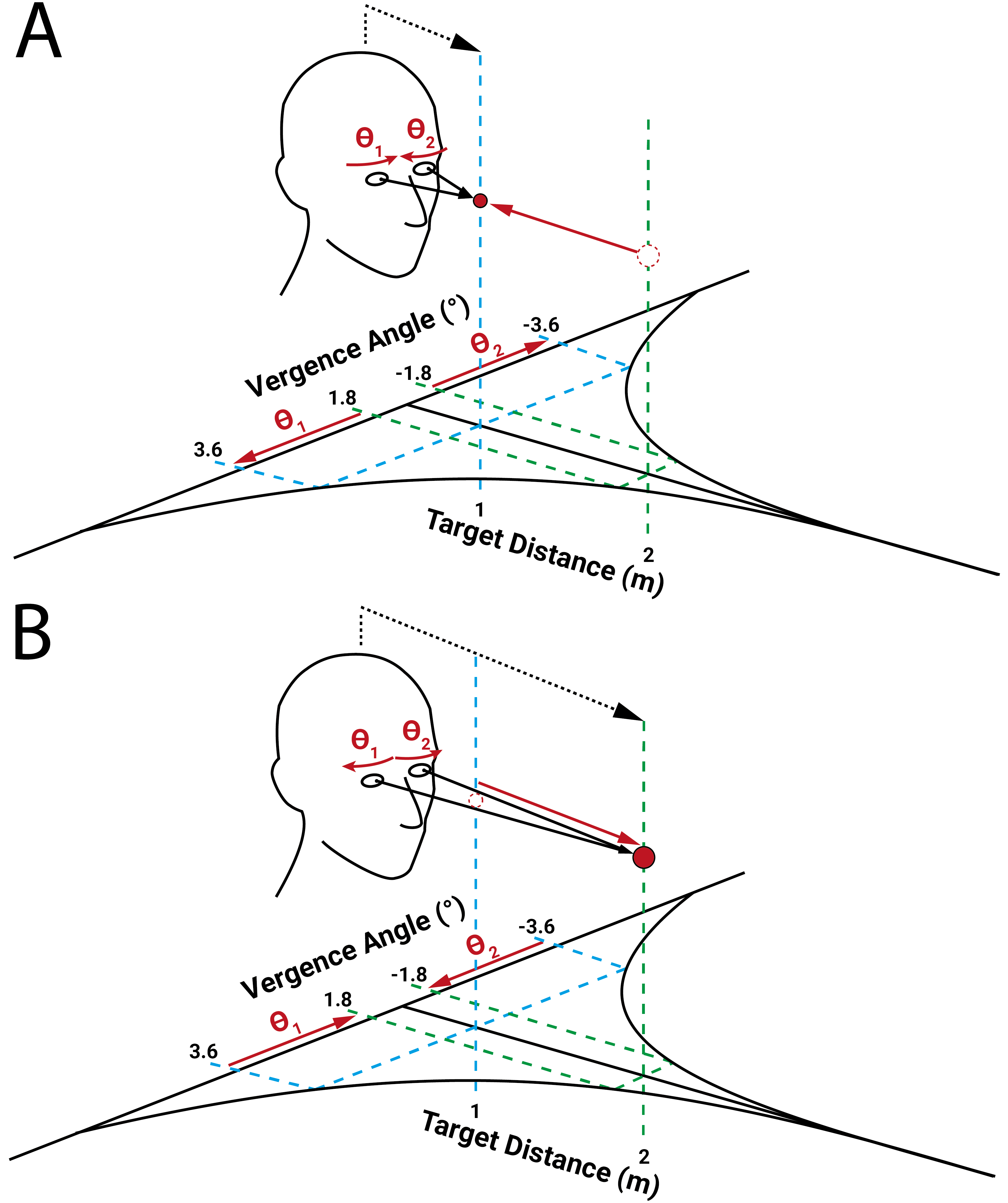

Vergence Matching: Inferring Attention to Objects in 3D Environments for Gaze-Assisted Selection

Ludwig Sidenmark, Christopher Clarke, Joshua Newn, Mathias N. Lystbæk, Ken Pfeuffer, and Hans Gellersen

Gaze pointing is the de facto standard to infer attention and interact in 3D environments but is constrained by motor and sensor limitations. To circumvent these limitations, we propose a vergence-based motion correlation method to detect visual attention toward very small targets. Smooth depth movements relative to the user are induced on 3D objects, which cause slow vergence eye movements when looked upon. Using the principle of motion correlation, the depth movements of the object and vergence eye movements are matched to determine which object the user is focussing on. In two user studies, we demonstrate how the technique can reliably infer gaze attention on very small targets, systematically explore how different stimulus motions affect attention detection, and show how the technique can be extended to multi-target selection. Finally, we provide example applications using the concept and design guidelines for small target and accuracy-independent attention detection in 3D environments.
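
The motion correlation principle can be sketched as follows. This is a simplified illustration, not the method from the paper: it assumes a gaze depth estimate has already been derived from vergence, uses Pearson correlation over a fixed window, and the names `match_target` and `threshold` are hypothetical.

```python
import math

def pearson(xs, ys):
    """Pearson correlation of two equal-length signals (0.0 if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)

def match_target(gaze_depth, object_depths, threshold=0.8):
    """Return the object whose depth motion best correlates with the gaze depth.

    gaze_depth    window of depth estimates derived from vergence (assumed input)
    object_depths dict mapping object name to its depth over the same window
    threshold     minimum correlation required to report a match (assumed value)
    """
    best_name, best_r = None, threshold
    for name, depths in object_depths.items():
        r = pearson(gaze_depth, depths)
        if r > best_r:
            best_name, best_r = name, r
    return best_name
```

Because the match depends on how the signals move together rather than on where the gaze ray lands, objects that move in depth with different phases can be told apart even when they are too small to hit reliably by pointing.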

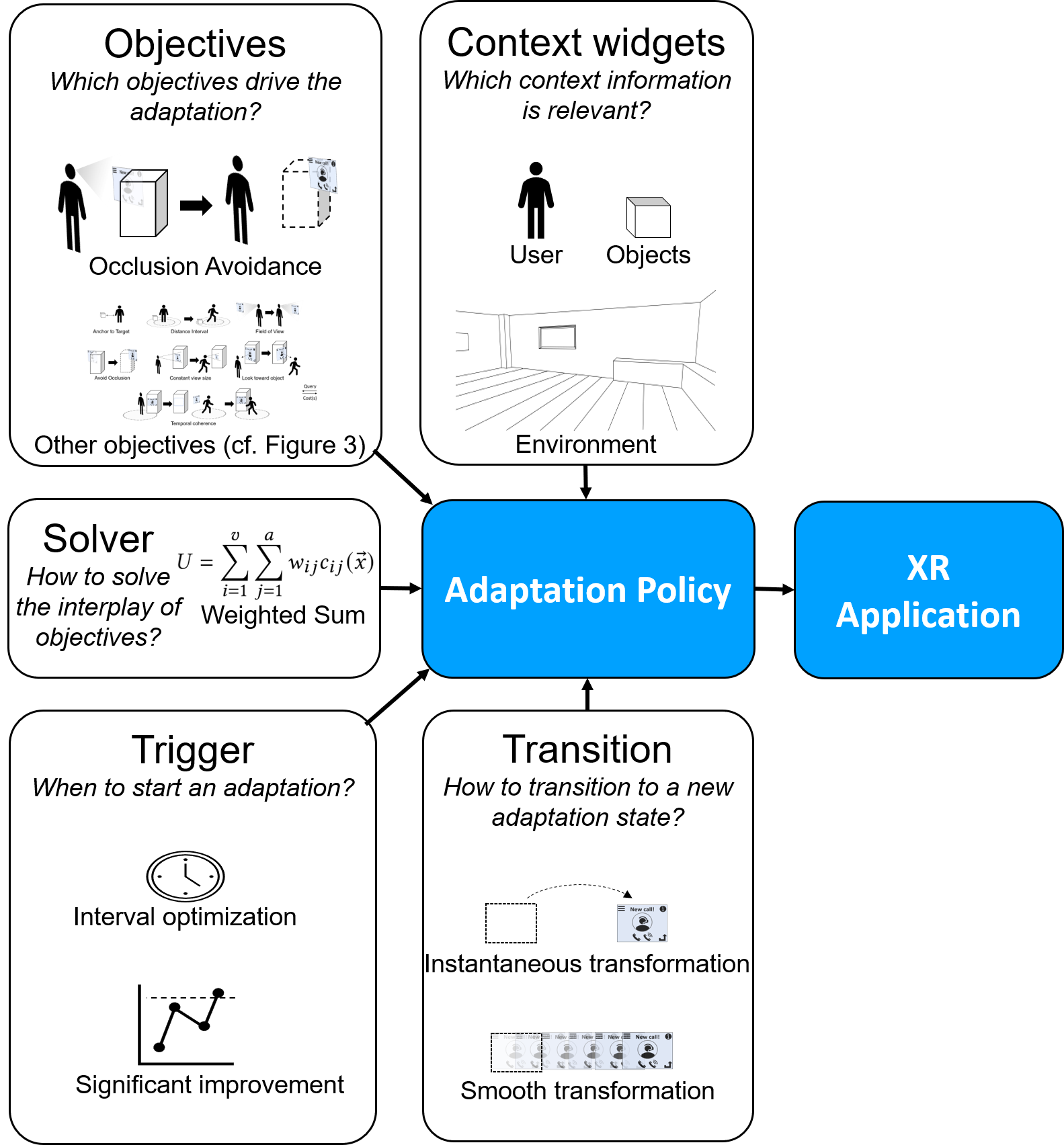

AUIT – the Adaptive User Interfaces Toolkit for Designing XR Applications

Joao Marcelo Evangelista Belo, Mathias Nørhede Lystbæk, Anna Maria Feit, Ken Pfeuffer, Peter Kán, Antti Oulasvirta, and Kaj Grønbæk

Adaptive user interfaces can improve experiences in Extended Reality (XR) applications by adapting interface elements according to the user’s context. Although extensive work explores different adaptation policies, XR creators often struggle with their implementation, which involves laborious manual scripting. The few available tools are underdeveloped for realistic XR settings where it is often necessary to consider conflicting aspects that affect an adaptation. We fill this gap by presenting AUIT, a toolkit that facilitates the design of optimization-based adaptation policies. AUIT allows creators to flexibly combine policies that address common objectives in XR applications, such as element reachability, visibility, and consistency. Instead of using rules or scripts, specifying adaptation policies via adaptation objectives simplifies the design process and enables creative exploration of adaptations. After creators decide which adaptation objectives to use, a multi-objective solver finds appropriate adaptations in real-time. A study showed that AUIT allowed creators of XR applications to quickly and easily create high-quality adaptations.

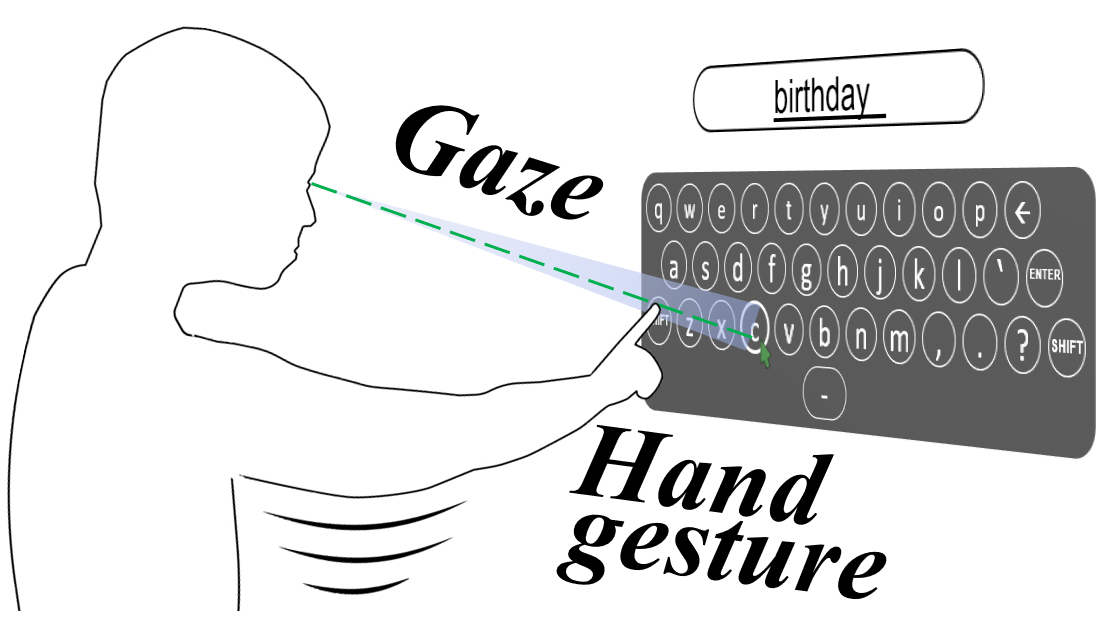

Exploring Gaze for Assisting Freehand Selection-based Text Entry in AR

Mathias N. Lystbæk, Ken Pfeuffer, Jens Emil Grønbæk, and Hans Gellersen

With eye-tracking increasingly available in Augmented Reality, we explore how gaze can be used to assist freehand gestural text entry. Here the eyes are often coordinated with manual input across the spatial positions of the keys. Inspired by this, we investigate gaze-assisted selection-based text entry through the concept of spatial alignment of both modalities. Users can enter text by aligning both gaze and manual pointer at each key, as a novel alternative to existing dwell-time or explicit manual triggers. We present a text entry user study comparing two such alignment techniques to a gaze-only and a manual-only baseline. The results show that one alignment technique reduces physical finger movement by more than half compared to standard in-air finger typing, and is faster and exhibits less perceived eye fatigue than an eyes-only dwell-time technique. We discuss trade-offs between unimodal and multimodal text entry techniques, pointing to novel ways to integrate eye movements to facilitate virtual text entry.

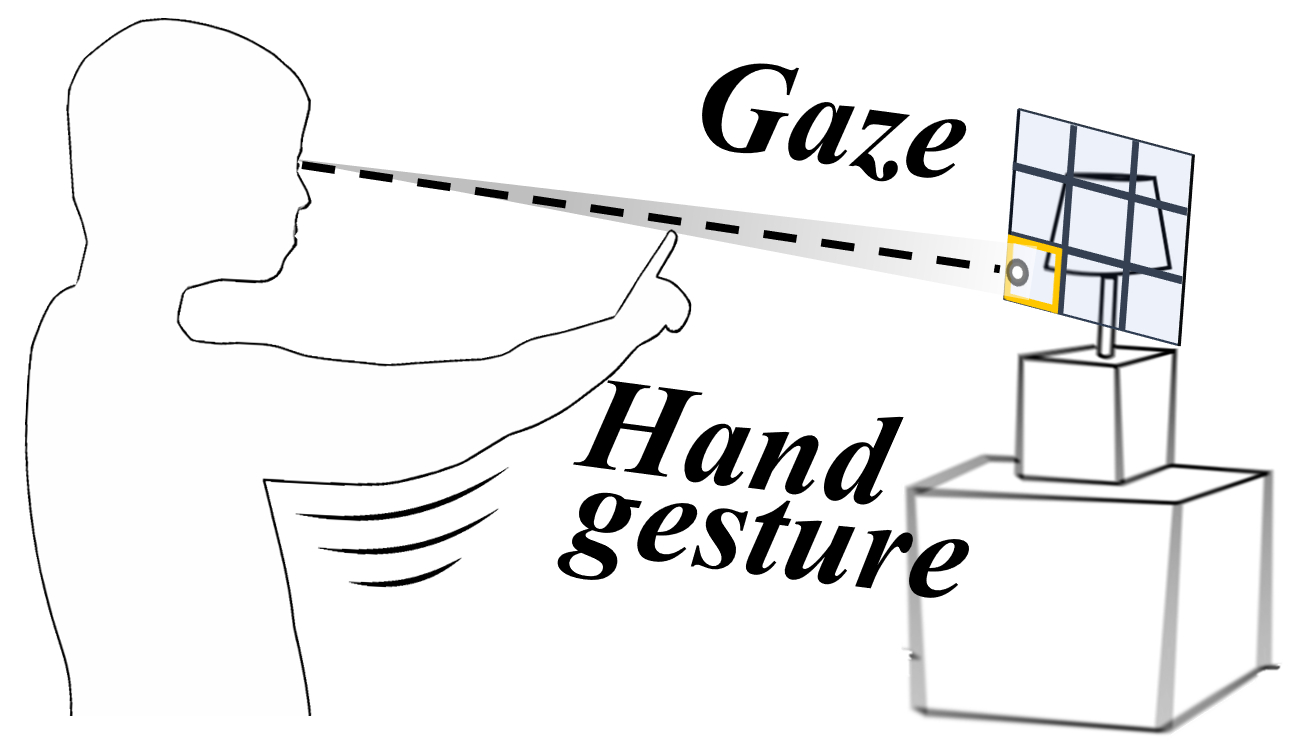

Gaze-Hand Alignment: Combining Eye Gaze and Mid-Air Pointing for Interacting with Menus in Augmented Reality

Mathias N. Lystbæk, Peter Rosenberg, Ken Pfeuffer, Jens Emil Grønbæk, and Hans Gellersen

Gaze and freehand gestures suit Augmented Reality as users can interact with objects at a distance without the need for a separate input device. We propose Gaze-Hand Alignment as a novel multimodal selection principle, defined by concurrent use of both gaze and hand for pointing and alignment of their input on an object as selection trigger. Gaze naturally precedes manual action and is leveraged for pre-selection, and manual crossing of a pre-selected target completes the selection. We demonstrate the principle in two novel techniques, Gaze&Finger for input by direct alignment of hand and finger raised into the line of sight, and Gaze&Hand for input by indirect alignment of a cursor with relative hand movement. In a menu selection experiment, we evaluate the techniques in comparison with Gaze&Pinch and a hands-only baseline. The study showed the gaze-assisted techniques to outperform hands-only input, and gave insight into trade-offs in combining gaze with direct or indirect, and spatial or semantic freehand gestures.
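
The alignment trigger can be sketched as a small state machine over a flat 2D menu. This is an illustrative simplification under stated assumptions, not the implementation from the paper: the `Item` and `AlignmentSelector` names, the rectangular hit test, and firing on the onset of alignment are all choices made for this example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Item:
    name: str
    x: float
    y: float
    w: float
    h: float

    def contains(self, point):
        px, py = point
        return self.x <= px <= self.x + self.w and self.y <= py <= self.y + self.h

def item_at(items, point):
    """Return the first item containing the point, or None."""
    for item in items:
        if item.contains(point):
            return item
    return None

class AlignmentSelector:
    """Fires a selection once, at the moment gaze and pointer align on one item."""

    def __init__(self, items):
        self.items = items
        self._aligned = None  # item both inputs were on in the previous frame

    def update(self, gaze_point, pointer_point):
        gazed = item_at(self.items, gaze_point)       # pre-selection by gaze
        pointed = item_at(self.items, pointer_point)  # manual pointer
        aligned = gazed if gazed is not None and gazed == pointed else None
        fired = aligned is not None and aligned != self._aligned
        self._aligned = aligned
        return aligned.name if fired else None
```

Calling `update` once per frame returns an item name only on the frame where alignment begins, so holding both inputs on the same item does not select it repeatedly, and no separate click or dwell timer is involved.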

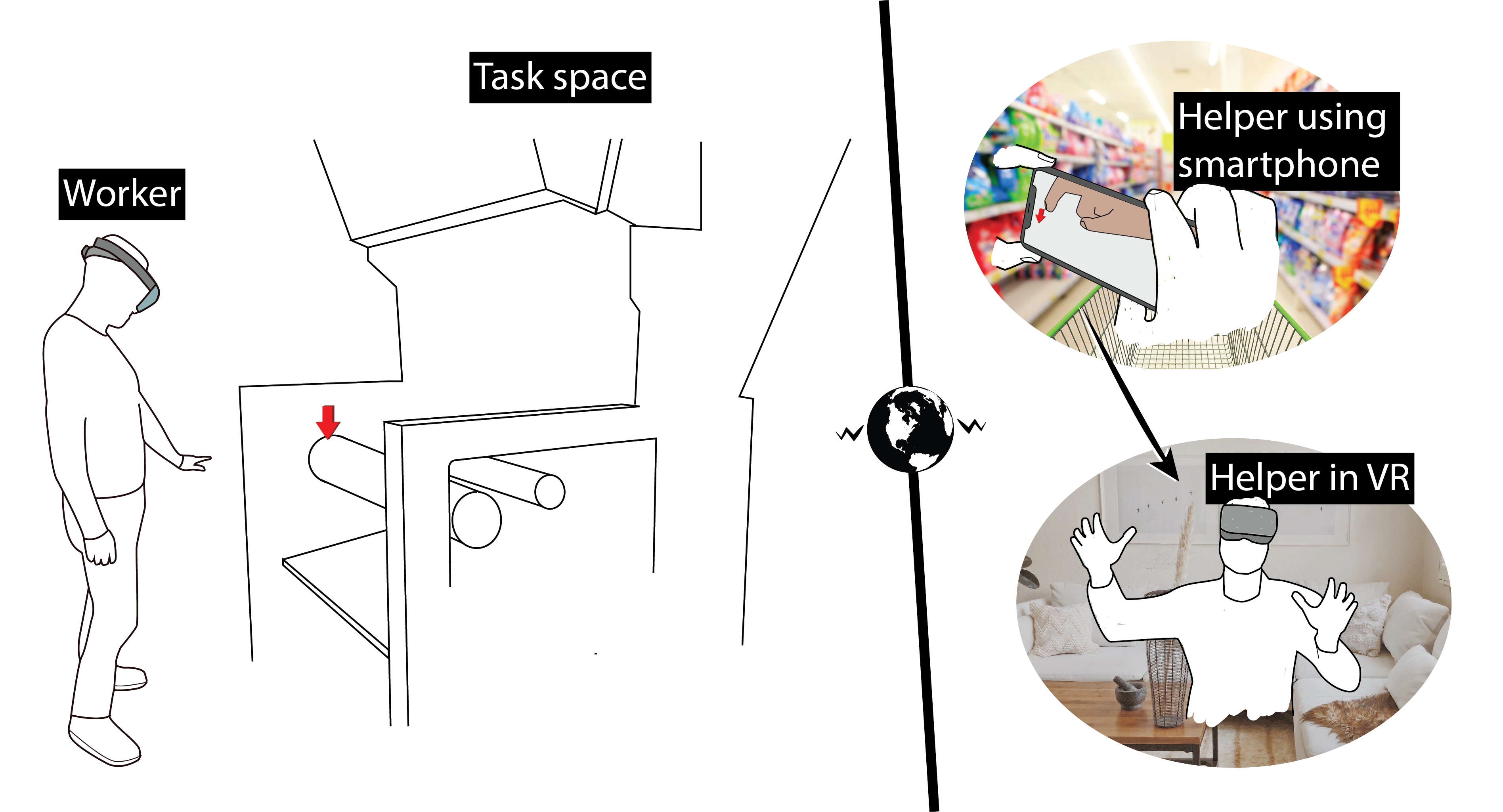

Challenges of XR Transitional Interfaces in Industry 4.0

Joao Marcelo Evangelista Belo, Tiare Feuchtner, Chiwoong Hwang, Rasmus Skovhus Lunding, Mathias Nørhede Lystbæk, Ken Pfeuffer, and Troels Ammitsbøl Rasmussen

Past work has demonstrated how different Reality-Virtuality classes can address the requirements posed by Industry 4.0 scenarios. For example, a remote expert assists an on-site worker in a troubleshooting task by viewing a video of the workspace on a computer screen, but at times switches to a VR headset to take advantage of spatial deixis and body language. However, little attention has been paid to the question of how to transition between multiple classes. Ideally, the benefit of making a transition should outweigh the transition cost. User support for Reality-Virtuality transitions can advance the integration of XR in current industrial work processes – particularly in scenarios from the manufacturing industry, where worker safety concerns, efficiency, error reduction, and adhering to company policies are critical success factors. Therefore, in this position paper, we discuss three scenarios from the manufacturing industry that involve transitional interfaces. Based on these, we propose design considerations and reflect on challenges for seamless transitional interfaces.